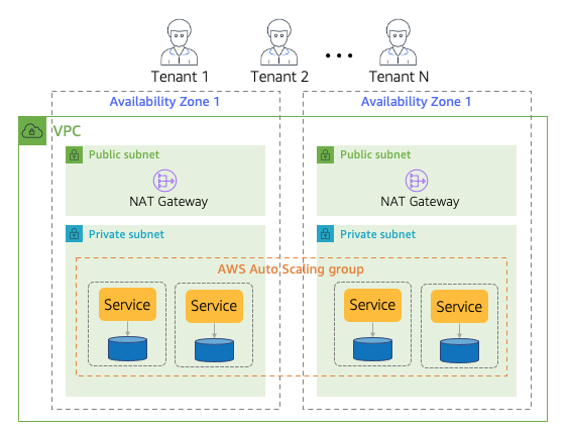

A Sample Architecture

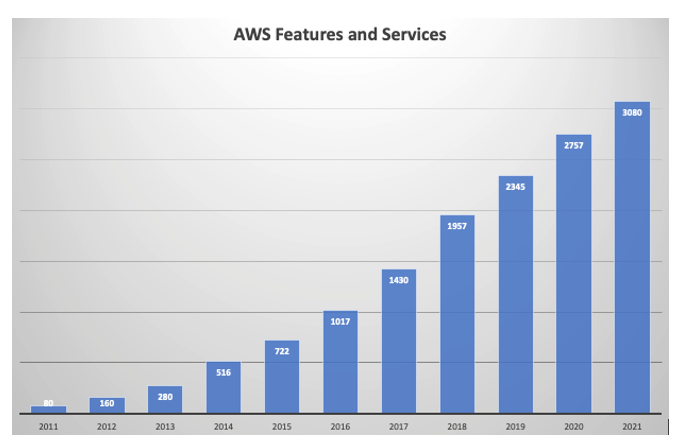

As you can imagine, the architecture of a full stack pooled environment is fairly straightforward. In fact, on the surface, it doesn’t look much different from any classic application architecture. Figure 3-9 provides an example of a fully pooled architecture deployed on AWS.

Figure 3-9. A full stack pooled architecture

Here you’ll see I’ve included many of the same constructs that were part of our full stack silo environment. There’s a VPC for the network of our environment, and it spans two Availability Zones for high availability. Within the VPC, separate private and public subnets divide the external and internal views of our resources. Finally, within the private subnet, you’ll see placeholders for the various microservices that deliver the server-side functionality of our application. These services use storage that is deployed in a pooled model, and their compute is scaled horizontally using an Auto Scaling group. At the top, of course, we also illustrate that this environment is being consumed by multiple tenants.

Now, looking at the architecture at this level of detail, you’d be hard-pressed to find anything distinctly multi-tenant about it. In reality, this could be the architecture of almost any kind of application. Multi-tenancy doesn’t show up in a full stack pooled model as a concrete construct. It becomes visible only when you look at the run-time activity inside this environment. Every request sent through this architecture is accompanied by tenant context, and the infrastructure and services must acquire and apply that context as part of processing every request.
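To make "acquire tenant context" a bit more concrete, here is a minimal sketch of the first step a pooled service might take on every request. It assumes, purely for illustration, that tenant context arrives in a hypothetical `X-Tenant-Id` header; `TenantContext`, `Request`, and `acquire_tenant_context` are likewise hypothetical names, not part of any framework. Real systems more often carry this context in a signed token such as a JWT claim.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TenantContext:
    """Tenant context carried by every request (hypothetical shape)."""
    tenant_id: str


@dataclass
class Request:
    """Minimal stand-in for an incoming HTTP request."""
    path: str
    headers: dict[str, str] = field(default_factory=dict)


# Hypothetical header name; production systems often use a signed token
# (for example, a JWT claim) instead of a plain header.
TENANT_HEADER = "X-Tenant-Id"


class MissingTenantContext(Exception):
    """Raised when a request arrives without tenant context."""


def acquire_tenant_context(request: Request) -> TenantContext:
    """Extract tenant context from a request, rejecting requests that lack it."""
    tenant_id = request.headers.get(TENANT_HEADER, "").strip()
    if not tenant_id:
        # In a pooled environment, a request with no tenant cannot be
        # safely processed, so fail closed.
        raise MissingTenantContext(f"no tenant context on request to {request.path}")
    return TenantContext(tenant_id=tenant_id)


if __name__ == "__main__":
    req = Request(path="/items/42", headers={TENANT_HEADER: "tenant-1"})
    print(acquire_tenant_context(req))  # TenantContext(tenant_id='tenant-1')
```

The design choice worth noting is that a missing tenant results in an error rather than a default tenant, since guessing the tenant in shared infrastructure risks exposing one tenant's data to another.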

Imagine, for example, a scenario where Tenant 1 makes a request to fetch an item from storage. To process that request, your multi-tenant services need to extract the tenant context and use it to determine which items within the pooled storage belong to Tenant 1. As I move through the upcoming chapters, you’ll see how this context ends up having a profound influence on the implementation and deployment of these services. For now, the key is to understand that a full stack pooled model relies primarily on its run-time ability to share resources and apply tenant context where needed.
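The Tenant 1 scenario can be sketched with an in-memory stand-in for pooled storage. This is only an illustration under stated assumptions: `PooledItemStore` and `Item` are hypothetical, and real pooled storage (a shared database table, for instance) would typically apply the same idea by tagging every row with a tenant identifier and including it in every query.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Item:
    tenant_id: str  # every record in pooled storage is tagged with its owner
    item_id: str
    name: str


class PooledItemStore:
    """In-memory stand-in for storage shared by all tenants (hypothetical)."""

    def __init__(self) -> None:
        # Keyed by (tenant_id, item_id) so tenant ownership is part of every lookup.
        self._items: dict[tuple[str, str], Item] = {}

    def put(self, item: Item) -> None:
        self._items[(item.tenant_id, item.item_id)] = item

    def get(self, tenant_id: str, item_id: str) -> Optional[Item]:
        # Tenant context is applied here: another tenant's item with the
        # same item_id is never returned.
        return self._items.get((tenant_id, item_id))

    def list_for_tenant(self, tenant_id: str) -> list[Item]:
        # Only items owned by the calling tenant are visible.
        return [item for (owner, _), item in self._items.items() if owner == tenant_id]


if __name__ == "__main__":
    store = PooledItemStore()
    store.put(Item("tenant-1", "42", "widget"))
    store.put(Item("tenant-2", "42", "gadget"))
    print(store.get("tenant-1", "42"))  # Tenant 1 sees only its own item 42
```

Both tenants' items live side by side in the same store, which is exactly what makes the model pooled; the isolation comes entirely from applying tenant context at run time.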

This architecture represents just one flavor of a full stack pooled model. Each technology stack (containers, serverless, relational storage, NoSQL storage, queues) can influence the footprint of the full stack pooled environment. The spirit of the full stack pooled model, however, remains the same across most of these variations. Whether you’re running in a Kubernetes cluster or a VPC, the basic idea is that the resources in that environment are pooled and must scale based on the collective load of all tenants.
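Scaling on collective load can be illustrated with a small sizing function. This is a hypothetical sketch, not how any particular auto-scaling service works: it assumes per-tenant request rates are available, that each instance handles a fixed `capacity_per_instance`, and that a floor of two instances (one per Availability Zone in Figure 3-9) is desired. In practice, an AWS Auto Scaling group would usually react to an aggregate metric such as average CPU utilization through a scaling policy.

```python
import math
from typing import Mapping


def desired_instance_count(
    requests_per_sec_by_tenant: Mapping[str, float],
    capacity_per_instance: float,
    min_instances: int = 2,   # assumed floor, e.g. one instance per Availability Zone
    max_instances: int = 20,  # assumed ceiling to bound cost
) -> int:
    """Size a pooled fleet from the combined load of all tenants (hypothetical)."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Pooled resources serve every tenant, so only the aggregate load matters;
    # no tenant is assigned instances of its own.
    total_load = sum(requests_per_sec_by_tenant.values())
    needed = math.ceil(total_load / capacity_per_instance)
    # Clamp the result to the configured bounds.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    load = {"tenant-1": 120.0, "tenant-2": 300.0, "tenant-3": 80.0}
    print(desired_instance_count(load, capacity_per_instance=100.0))  # 5
```

Note that the individual tenants disappear once their load is summed: a spike from any single tenant simply raises the collective total that the shared fleet must absorb.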